Behavioural Analytics

Academic Publications

An Inventory of Problems–29 (IOP–29) study investigating feigned schizophrenia and random responding in a British community sample

Compared to other Western countries, malingering research is still relatively scarce in the United Kingdom, partly because only a few brief and easy-to-use symptom validity tests (SVTs) have been validated for use with British test-takers. This online study examined the validity of the Inventory of Problems–29 (IOP–29) in detecting feigned schizophrenia and random responding in 151 British volunteers. Each participant took three IOP–29 test administrations: (a) responding honestly; (b) pretending to suffer from schizophrenia; and (c) responding at random. They also completed a schizotypy measure (O-LIFE) under standard instructions. The IOP–29’s feigning scale (FDS) showed excellent validity in discriminating honest responding from feigned schizophrenia (AUC = .99), and its classification accuracy was not significantly affected by the presence of schizotypal traits. Additionally, a recently introduced IOP–29 scale aimed at detecting random responding (RRS) demonstrated very promising results.

(From the journal abstract)

Winters, C. L., Giromini, L., Crawford, T. J., Ales, F., Viglione, D. J., & Warmelink, L. (2020). An Inventory of Problems–29 (IOP–29) study investigating feigned schizophrenia and random responding in a British community sample. Psychiatry, Psychology and Law, 1–20.

Quantifying smartphone “use”: Choice of measurement impacts relationships between “usage” and health

Problematic smartphone scales and duration estimates of use dominate research that considers the impact of smartphones on people and society. However, issues with conceptualization and subsequent measurement can obscure genuine associations between technology use and health. Here, we consider whether different ways of measuring “smartphone use,” notably through problematic smartphone use (PSU) scales, subjective estimates, or objective logs, lead to contrasting associations with mental and physical health. Across two samples including iPhone (n = 199) and Android (n = 46) users, we observed that measuring smartphone interactions with PSU scales produced larger associations with mental health than subjective estimates or objective logs did. Notably, the size of the relationship was fourfold in Study 1, and almost three times as large in Study 2, when relying on a PSU scale that measured smartphone “addiction” instead of objective use. Further, in regression models, only smartphone “addiction” scores predicted mental health outcomes, whereas objective logs or estimates were not significant predictors. We conclude that addressing people’s appraisals including worries about their technology usage is likely to have greater mental health benefits than reducing their overall smartphone use. Reducing general smartphone use should therefore not be a priority for public health interventions at this time.

(From the journal abstract)

Shaw, H., Ellis, D. A., Geyer, K., Davidson, B. I., Ziegler, F. V., & Smith, A. (2020). Quantifying smartphone “use”: Choice of measurement impacts relationships between “usage” and health. Technology, Mind, and Behavior, 1(2).

Understanding the Psychological Process of Avoidance-Based Self-Regulation on Facebook

In relation to social network sites, prior research has evidenced behaviors (e.g., censoring) that individuals enact to avoid projecting an undesired image to their online audiences. However, no work directly examines the psychological process underpinning such behavior. Drawing upon the theory of self-focused attention and related literature, a model is proposed to fill this research gap. Two studies examine the process whereby public self-awareness (stimulated by engaging with Facebook) leads to a self-comparison with audience expectations and, if discrepant, an increase in social anxiety, which results in the intention to perform avoidance-based self-regulation. By finding support for this process, this research contributes an extended understanding of the psychological factors leading to avoidance-based regulation when online selves are subject to surveillance.

(From the journal abstract)

Marder, B., Houghton, D., Joinson, A., Shankar, A., & Bull, E. (2016). Understanding the Psychological Process of Avoidance-Based Self-Regulation on Facebook. Cyberpsychology, Behavior, and Social Networking, 19(5), 321–327.

How is Extraversion related to Social Media Use? A Literature Review

With nearly 3.5 billion people now using some form of social media, understanding its relationship with personality has become a crucial focus of psychological research.

As such, research linking personality traits to social media behaviour has proliferated in recent years, resulting in a disparate set of literature that is rarely synthesised. To address this, we performed a systematic search that identified 182 studies relating extraversion to social media behaviour.

Our findings highlight that extraversion and social media are studied across six areas: 1) content creation, 2) content reaction, 3) user profile characteristics, 4) patterns of use, 5) perceptions of social media, and 6) aggression, trolling, and excessive use.

We compare these findings to offline behaviour and identify parallels such as extraverts' desire for social attention and their tendency to display positivity. Extraverts are also likely to use social media, spend more time using one or more social media platforms, and regularly create content.

We discuss how this evidence will support the future development and design of social media platforms, and its application across a variety of disciplines such as marketing and human-computer interaction.

(From the journal abstract)

Thomas Bowden-Green, Joanne Hinds and Adam Joinson. 2020. ‘How Is Extraversion Related to Social Media Use? A Literature Review’. Personality and Individual Differences. https://doi.org/10.1016/j.paid.2020.110040.

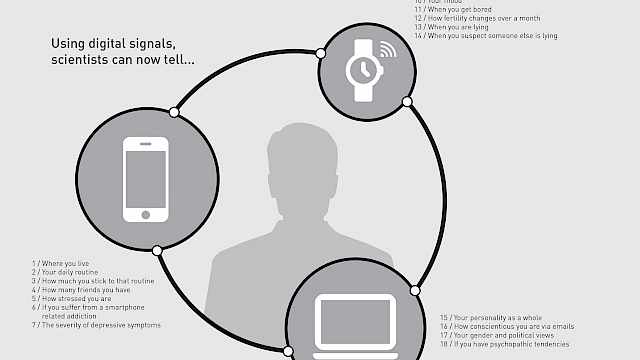

What Demographic Attributes Do Our Digital Footprints Reveal?

To what extent does our online activity reveal who we are? Recent research has demonstrated that the digital traces left by individuals as they browse and interact with others online may reveal who they are and what their interests may be. In the present paper we report a systematic review that synthesises current evidence on predicting demographic attributes from online digital traces. Studies were included if they met the following criteria: (i) they reported findings where at least one demographic attribute was predicted/inferred from at least one form of digital footprint, (ii) the method of prediction was automated, and (iii) the traces were either visible (e.g. tweets) or non-visible (e.g. clickstreams). We identified 327 studies published up until October 2018. Across these articles, 14 demographic attributes were successfully inferred from digital traces; the most studied included gender, age, location, and political orientation. For each of the demographic attributes identified, we provide a database containing the platforms and digital traces examined, sample sizes, accuracy measures and the classification methods applied. Finally, we discuss the main research trends/findings, methodological approaches and recommend directions for future research.

(From the journal abstract)

Joanne Hinds and Adam N. Joinson. 2018. ‘What Demographic Attributes Do Our Digital Footprints Reveal? A Systematic Review’. PLOS ONE, 13 (11): e0207112. https://doi.org/10.1371/journal.pone.0207112.

Human and Computer Personality Prediction From Digital Footprints

Is it possible to judge someone accurately from his or her online activity? The Internet provides vast opportunities for individuals to present themselves in different ways, from simple self-enhancement to malicious identity fraud. We often rely on our Internet-based judgments of others to make decisions, such as whom to socialize with, date, or employ. Recently, personality-perception researchers have turned to studying social media and digital devices in order to ask whether a person’s digital traces can reveal aspects of his or her identity. Simultaneously, advances in “big data” analytics have demonstrated that computer algorithms can predict individuals’ traits from their digital traces. In this article, we address three questions: What do we currently know about human- and computer-based personality assessments? How accurate are these assessments? Where are these fields heading? We discuss trends in the current findings, provide an overview of methodological approaches, and recommend directions for future research.

(From the journal abstract)

Joanne Hinds and Adam Joinson. 2019. ‘Human and Computer Personality Prediction From Digital Footprints’. Current Directions in Psychological Science, https://doi.org/10.1177/0963721419827849.

Can Programming Frameworks Bring Smartphones into the Mainstream of Psychological Science?

Smartphones continue to provide huge potential for psychological science and the advent of novel research frameworks brings new opportunities for researchers who have previously struggled to develop smartphone applications.

However, despite this renewed promise, smartphones have failed to become a standard item within psychological research. Here we consider the key issues that continue to limit smartphone adoption within psychological science and how these barriers might be diminishing in light of ResearchKit and other recent methodological developments.

We conclude that while these programming frameworks are certainly a step in the right direction it remains challenging to create usable research-orientated applications with current frameworks.

Smartphones may only become an asset for psychology and social science as a whole when development software that is both easy to use and secure becomes freely available.

(From the journal abstract)

Piwek, Lukasz, David A. Ellis, and Sally Andrews. 2016. ‘Can Programming Frameworks Bring Smartphones into the Mainstream of Psychological Science?’ Frontiers in Psychology 7. https://doi.org/10.3389/fpsyg.2016.01252.

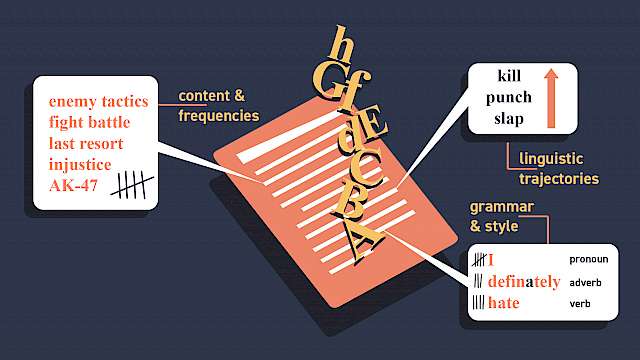

Mimicry in Online Conversations: An Exploratory Study of Linguistic Analysis Techniques

A number of computational techniques have been proposed that aim to detect mimicry in online conversations. In this paper, we investigate how well these reflect the prevailing cognitive science model, i.e. the Interactive Alignment Model (IAM). We evaluate Local Linguistic Alignment, word vectors, and Language Style Matching and show that these measures tend to show the features we expect to see in the IAM, but significantly fall short of the work of human classifiers on the same data set. This reflects the need for substantial additional research on computational techniques to detect mimicry in online conversations. We suggest the further work needed to measure these techniques and others more accurately.

(From the journal abstract)

Carrick, Tom, Awais Rashid, and Paul J. Taylor. 2016. ‘Mimicry in Online Conversations: An Exploratory Study of Linguistic Analysis Techniques’. In 2016 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), 732–36. http://eprints.lancs.ac.uk/80520/1/asonam_mimicry.pdf

Predicting Collective Action from Micro-Blog Data

Global and national events in recent years have shown that social media, and particularly micro-blogging services such as Twitter, can be a force for good (e.g., Arab Spring) and harm (e.g., London riots). In both of these examples, social media played a key role in group formation and organisation, and in the coordination of the group’s subsequent collective actions (i.e., the move from rhetoric to action).

Surprisingly, despite its clear importance, little is understood about the factors that lead to this kind of group development and the transition to collective action. This paper focuses on an approach to the analysis of data from social media to detect weak signals, i.e., indicators that initially appear at the fringes, but are, in fact, early indicators of such large-scale real-world phenomena.

Our approach is in contrast to existing research which focuses on analysing major themes, i.e., the strong signals, prevalent in a social network at a particular point in time. Analysis of weak signals can provide interesting possibilities for forecasting, with online user-generated content being used to identify and anticipate possible offline future events. We demonstrate our approach through analysis of tweets collected during the London riots in 2011 and use of our weak signals to predict tipping points in that context.

(From the journal abstract)

Charitonidis, Christos, Awais Rashid, and Paul J. Taylor. 2017. ‘Predicting Collective Action from Micro-Blog Data’. In Prediction and Inference from Social Networks and Social Media, 141–70. Lecture Notes in Social Networks. Springer, Cham. https://doi.org/10.1007/978-3-319-51049-1_7.