First Things First: Is Misinformation a Problem? (Yes, it is.)

Misinformation has become a buzzword, and many see the proliferation of misinformation and its potential impacts as an issue of substantial contemporary concern. We believe that, by and large, these concerns are justified. However, some argue that misinformation a) makes up only a small fraction of consumed information, b) is a symptom rather than a cause of problems, c) has only modest behavioural effects, and d) is nothing new. We disagree with these minimising arguments for several reasons:

While it is true that easily and objectively identifiable misinformation (e.g., ‘fake news’ headlines) makes up only a fraction of people’s information diet, focusing on this subset of misinformation ignores all other types, including subtle misrepresentations and systematic distortions.

Broader societal issues and trends (e.g., social inequality and disenfranchisement; economic uncertainties; low trust in institutions) have likely contributed causally to the increased spread of, and susceptibility to, misinformation. However, just because something is causally influenced by other factors does not mean it cannot have causal impacts of its own. For instance, there is evidence that misinformation has causally contributed to COVID-19 and MMR vaccine hesitancy, disregard for public-health advice, persecution of minorities, and the 2021 storming of the U.S. Capitol.

Measuring the impact of misinformation on behaviour is challenging because that impact is heterogeneous and is likely to be negligible or absent in certain cases (e.g., one-time exposure; low-plausibility misinformation; inconsequential topics). However, even small behavioural impacts can be meaningful at scale. Additionally, these impacts are not always direct; misinformation can indirectly shape people’s views and choices by influencing mainstream media, public discourse, and policy-making in political debates. Moreover, there are likely additional ripple effects, such as diminishing institutional trust, which can further impact behaviour in distinct ways.

People providing false and misleading information is obviously not a new phenomenon. However, the fact that misinformation has long been present does not mean that it is of no concern today. The misinformation problem has been exacerbated by rapid changes to the contemporary information environment, which is characterised by a growing reliance on the internet and social media as primary sources of information, unprecedented concentration of mainstream-media ownership, and the advent of powerful AI tools.

Misinformation: Psychological Interventions

If misinformation is considered a problem to be addressed in a given context, the question of solutions arises. Solutions need to be multi-pronged; from a policy perspective, there are at least four entry points for intervention:

Regulatory (e.g., legislation, codes of conduct),

Technological (e.g., algorithmic detection of problematic content on social-media platforms),

Educational (e.g., systematic efforts to strengthen media and information literacy),

Psychological (e.g., specific interventions targeting misinformation detection or sharing).

Our research has largely focused on the psychological dimension, where one of the significant issues we encounter is the resistance of misinformation to correction. This resistance stems from inherent biases in human cognition and from the fact that updating memory and revising existing knowledge is difficult and error-prone: correcting something that is believed to be true poses a cognitive challenge.

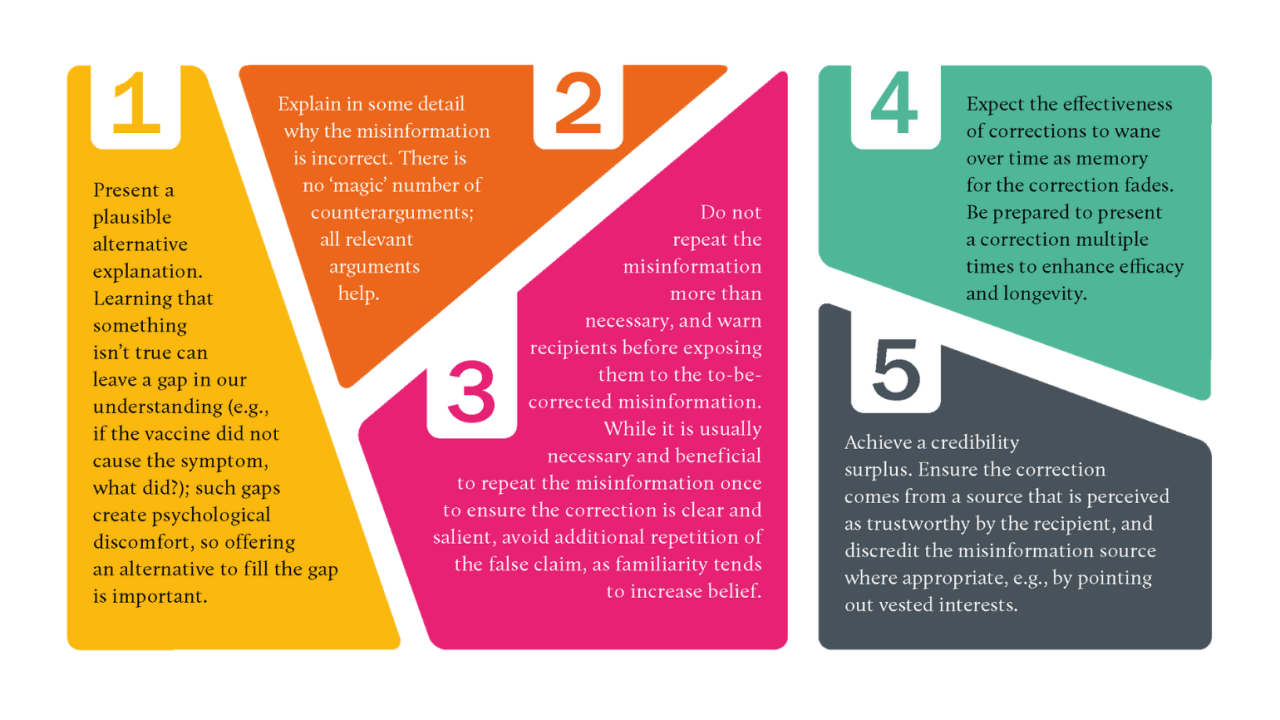

Accordingly, a substantial amount of research by our group and others has explored ways to effectively fact-check or debunk misinformation, which has highlighted important factors to consider. To illustrate, post-exposure corrections of misinformation are most effective when they incorporate the following elements:

Present a plausible alternative explanation. Learning that something isn’t true can leave a gap in our understanding (e.g., if the vaccine did not cause the symptom, what did?); such gaps create psychological discomfort, so offering an alternative to fill the gap is important.

Explain in some detail why the misinformation is incorrect. There is no ‘magic’ number of counterarguments; all relevant arguments help.

Do not repeat the misinformation more than necessary, and warn recipients before exposing them to the to-be-corrected misinformation. While it is usually necessary and beneficial to repeat the misinformation once to ensure the correction is clear and salient, avoid additional repetition of the false claim, as familiarity tends to increase belief.

Expect the effectiveness of corrections to wane over time as memory for the correction fades. Be prepared to present a correction multiple times to enhance efficacy and longevity.

Achieve a credibility surplus. Ensure the correction comes from a source that is perceived as trustworthy by the recipient, and discredit the misinformation source where appropriate, e.g., by pointing out vested interests.

These correction strategies should be incorporated into a larger intervention plan. Ideally, there should be ongoing monitoring of an information environment to enable an informed evaluation of the extent to which specific misinformation pieces are gaining traction and posing a risk of harm. Practitioners must be aware that any intervention risks amplifying misinformation sources and ‘buying into’ their framing of an issue. As such, debunking should only be applied after careful consideration of all potential outcomes.

Alternative strategies

Since debunking can only ever operate retroactively, practitioners should consider alternative strategies. These include active promotion of truthful narratives and factual evidence, competence boosts, and behaviour-oriented nudges.

Competence boosts include educational tools to enhance media and information literacy skills, such as lateral reading, and inoculation interventions that aim to protect consumers from misinformation by explaining the misleading argumentation strategies that disinformants use in their persuasive attacks. Although further research is needed, one potential benefit of this approach is that inoculated individuals may be able to transfer the gained resilience to other topics. For example, understanding that a climate-change-denying argument uses cherry-picking tends to provide some protection against cherry-picked arguments in other domains, such as vaccination.

Behaviour nudges include accuracy prompts that remind the consumer to consider information veracity, the introduction of friction to reduce unwanted behaviour (i.e., sharing misinformation), and the use of social norms to highlight that most people try not to share misinformation and believe sharing misinformation is wrong.

To summarise, targeted corrections that follow our five recommendations can help counter (potentially) harmful misinformation where it arises and begins spreading. Beyond corrections, a whole array of evidence-based psychological strategies is available to practitioners; used together, these can contribute to a healthier information environment.

Ullrich Ecker is a Professor of Cognitive Psychology and Australian Research Council Future Fellow; Toby Prike is a Postdoctoral Research Associate; Li Qian Tay is a PhD Student; all authors are at the University of Western Australia’s School of Psychological Science.

Copyright Information

As part of CREST’s commitment to open access research, this text is available under a Creative Commons BY-NC-SA 4.0 licence. Please refer to our Copyright page for full details.

IMAGE CREDITS: Copyright ©2024 R. Stevens / CREST (CC BY-SA 4.0)